Gamified Sign Language Learning Experience

Creating a VR application that teaches sign language to the hearing community, to encourage inclusivity and bridge communication barriers.

Capstone Project - VIT Chennai

Implemented gesture recognition, gamification techniques, and dynamic difficulty adjustment to build a VR application that teaches sign language to the hearing community.

My thesis compared the traditional learning method with a randomized game-based method and two dynamic difficulty adjustment algorithms. It measured accuracy, time, knowledge retention, player feedback, and motivation and engagement levels.

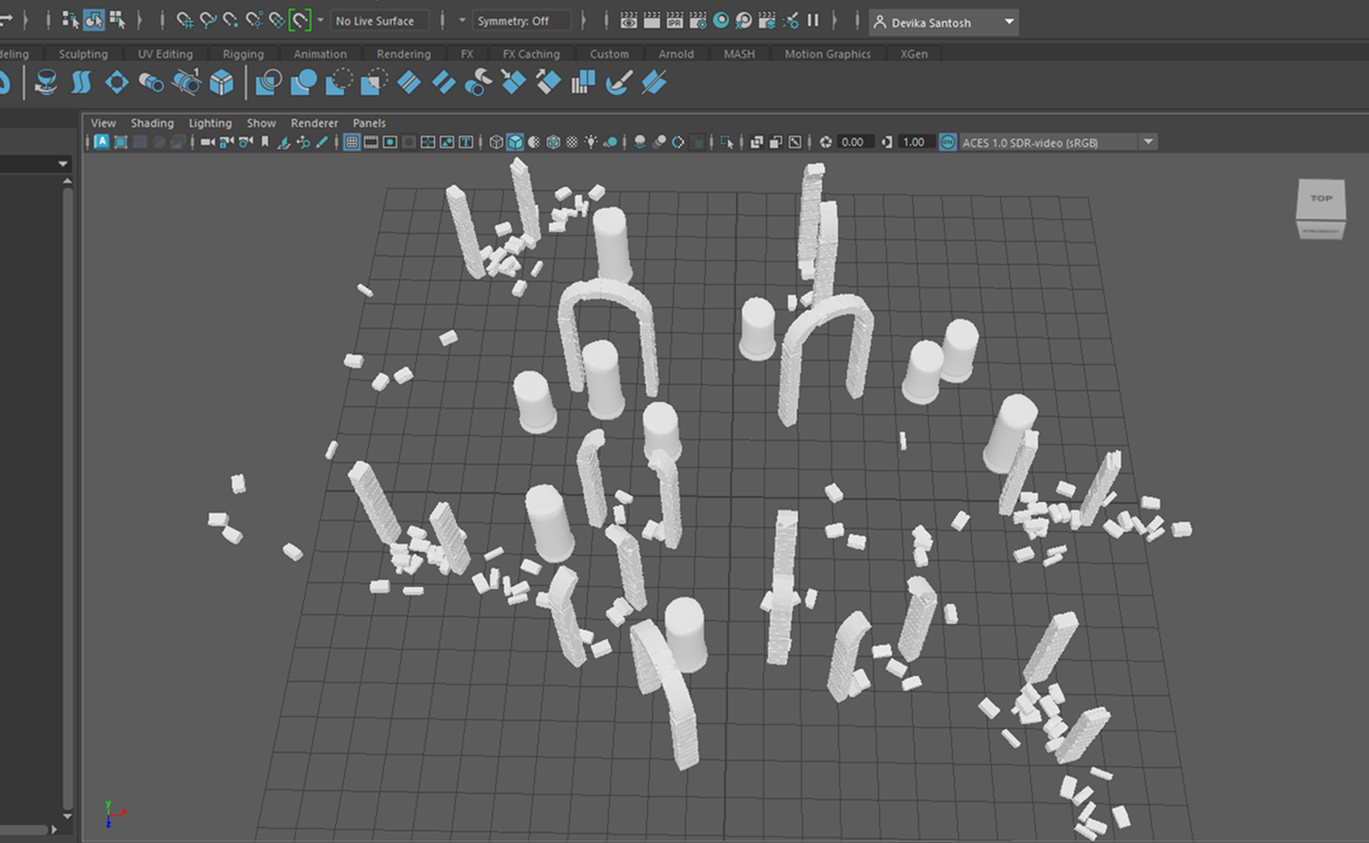

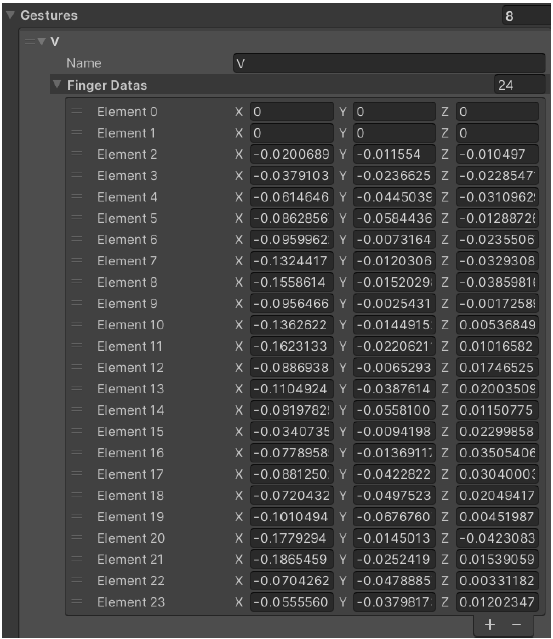

Created a hand gesture recognizer that maps the coordinates of the hand skeleton's finger bones and saves them for each gesture. Users can add as many gestures as required for either hand.

Storing the coordinates of each gesture's Oculus hand skeleton finger bones for recognition

Created two modes: practice and test. Practice mode is more forgiving: it displays the hand gesture, shows whether the user's response is correct, and lets them retry until it is.

Practice mode: teaches by displaying the hand gesture and allowing retries

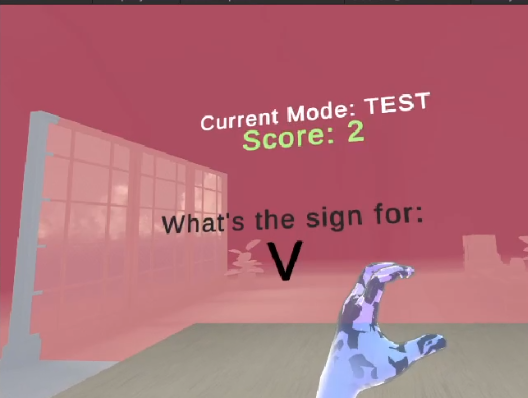

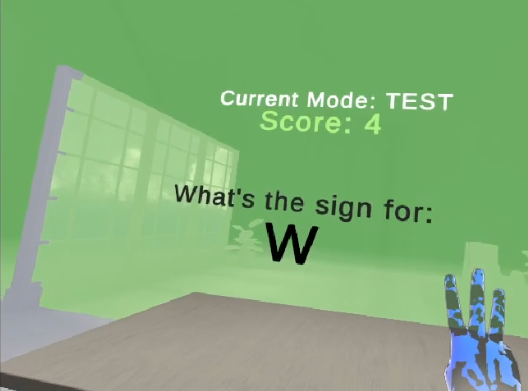

One of the 3 variations of test mode

Test mode has three variants:

- randomized

- a linear dynamic difficulty adjustment algorithm

- an exponential dynamic difficulty adjustment algorithm

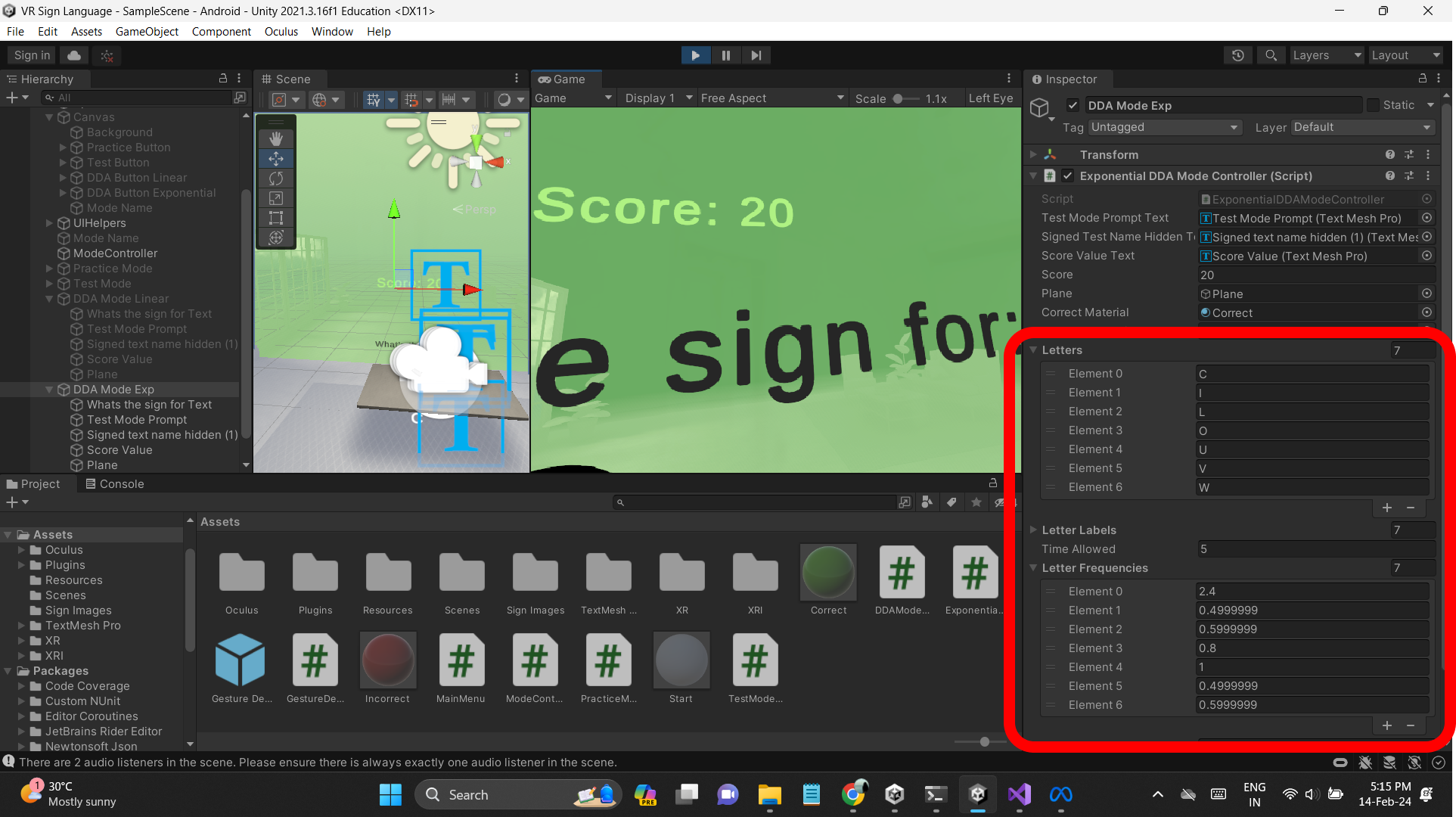

The dynamic modes ask a question more often when the user answers it incorrectly and less often once the user seems to know it, creating a more personalized learning experience.

Frequency of questions changing dynamically based on an exponential algorithm
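The write-up does not give the exact update rules, so the following is only one plausible reading, written in Python for illustration. Each sign is assumed to carry a selection weight, and questions are drawn with probability proportional to that weight. The linear variant adds or subtracts a fixed step after each answer. The exponential variant instead multiplies or divides the weight by a constant factor, so repeated mistakes raise a sign's frequency much faster. The constants `STEP`, `FACTOR`, and `MIN_WEIGHT` and all function names are hypothetical.

```python
import random

MIN_WEIGHT = 0.1  # hypothetical floor so a mastered sign still appears occasionally
STEP = 1.0        # hypothetical additive step for the linear variant
FACTOR = 2.0      # hypothetical multiplicative factor for the exponential variant


def update_linear(weight: float, correct: bool) -> float:
    """Linear DDA: a wrong answer adds a fixed step, a right answer subtracts it."""
    return max(MIN_WEIGHT, weight - STEP if correct else weight + STEP)


def update_exponential(weight: float, correct: bool) -> float:
    """Exponential DDA: a wrong answer multiplies the weight, a right answer divides it."""
    return max(MIN_WEIGHT, weight / FACTOR if correct else weight * FACTOR)


def next_question(weights: dict[str, float], rng: random.Random) -> str:
    """Pick a sign with probability proportional to its current weight."""
    signs = list(weights)
    return rng.choices(signs, weights=[weights[s] for s in signs], k=1)[0]
```

Consider a sign that starts at weight 1.0 and is answered wrongly three times in a row. Under the linear rule its weight reaches 4.0, but under the exponential rule it reaches 8.0. This is the sense in which the exponential variant reacts more aggressively to mistakes.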

- Time: 1 semester

- Software and Platforms: Unity Engine, Oculus Quest 2

Technologies Used

- Unity

- C#

- Oculus Quest 2